Evolution of Computers


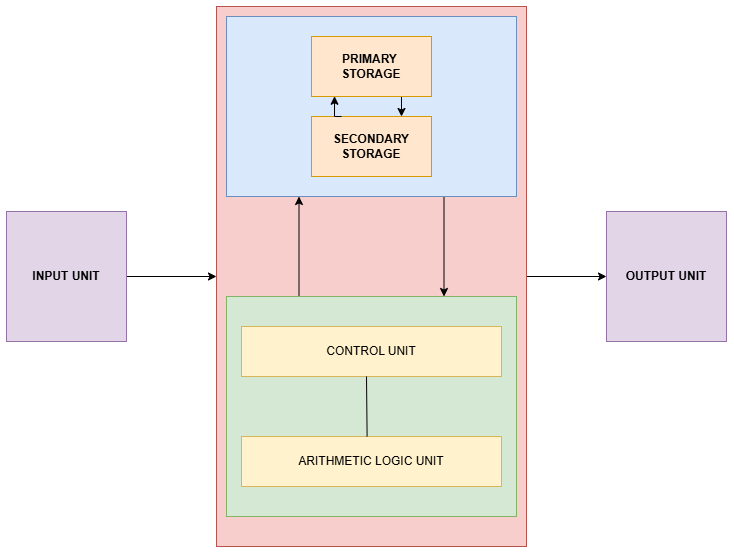

The evolution of computers spans five generations, from room-sized machines to AI-powered devices. Each stage brought hardware breakthroughs that shrank size, boosted speed, and enabled new uses, from scientific calculations to voice assistants.

This historical journey began during and just after World War II and continues today in the devices that run our smartphone apps. It shows how Von Neumann's principles scaled across successive technology shifts. Early computers cracked codes in wartime, and their descendants now forecast the weather.

First Generation (1940-1956): Vacuum Tube Era

First-generation computers relied on vacuum tubes for computation and magnetic drums for data storage. Vacuum tubes consumed massive amounts of energy (up to about 150 kW) and ran hot, so they burned out frequently and needed constant repairs, which made these machines unreliable. Programs were written in machine language, and input was supplied on punch cards. The magnetic drums could store only a very small amount of data. Operators fed the machines instructions manually, one after another, by setting switches and plugging wires to route data and control signals.

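Key examples include ENIAC (1945; about 18,000 tubes; roughly 30 tons), built for artillery calculations, and UNIVAC I (1951), the first commercial computer produced in the United States, which famously predicted the outcome of the 1952 US presidential election. Operation times were measured in milliseconds, and unreliability largely confined these machines to research labs. Real-life: ENIAC was designed to compute artillery trajectories, although it was completed only after World War II had ended.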

Second Generation (1956-1963): Transistor Revolution

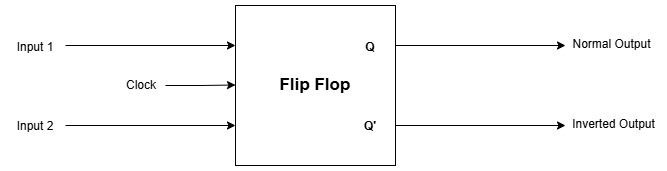

In this era, transistors replaced vacuum tubes. Transistors are tiny semiconductor switches, invented by John Bardeen, Walter Brattain and William Shockley at Bell Labs in 1947. They reduced computer size roughly tenfold and cut power consumption, marking the start of the second generation. Second-generation computers were programmed in assembly language, which made it practical to write more sophisticated programs, and they became reliable enough for business use.

The IBM 1401 dominated business offices, handling tasks such as billing, while the CDC 1604 served scientific work. Operation times dropped to microseconds. Real-life: airlines used these computers for seat reservations, cutting manual errors.

Third Generation (1964-1971): Integrated Circuits Boom

In this era, computers were built from integrated circuits (ICs), which combine many transistors on a single chip. Jack Kilby invented the IC in 1958, and ICs miniaturized components further, enabling minicomputers. Third-generation computers were more powerful and energy-efficient than second-generation machines, and their operating systems could run many application programs concurrently using multiprogramming. Peripheral devices such as monitors and keyboards came into common use.

The IBM System/360 unified a family of compatible computers, and the DEC PDP-8 launched the minicomputer market. Circuit speeds reached the nanosecond range. Real-life: banks automated check processing with IC-based machines.

Fourth Generation (1971-Present): Microprocessor Age

In this generation, microprocessors were introduced, and fourth-generation computers are the computers we use today. They are smaller, more powerful, and more efficient than machines of the previous three generations, and they cost far less. A microprocessor places an entire CPU on one chip; the first commercial microprocessor, the Intel 4004 (1971), was an LSI chip, and later VLSI chips packed in far more transistors. Microprocessors gave rise to personal computers, and GUIs, the internet, and laptops soon followed. Popular languages include C and Java. Computers became portable and affordable, with many costing under $1,000. Networking also evolved in this generation: computers at different locations could share data, and local area networks (LANs) became common.

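The IBM PC (1981) and the Apple Macintosh (1984) brought computers into homes. Clock speeds reached gigahertz, and chips now contain billions of transistors. Real-life: internet browsing, gaming, and the compilers programmers use every day all run on fourth-generation machines.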

Fifth Generation (1980s-Present): AI and Beyond

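Fifth-generation computing focuses on artificial intelligence, built on ULSI chips (millions of transistors each), parallel processing, and voice recognition. Japan's Fifth Generation Computer Systems (FGCS) project, launched in 1982, aimed to build logic-inference machines; today neural networks dominate AI, and quantum computing is an active frontier. Natural language processing and robotics are key application areas.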

Examples include IBM Watson, which won Jeopardy! in 2011, and modern GPUs used for deep learning. ChatGPT responds to natural-language queries, and self-driving cars process camera images in parallel.

FAQ

What defined first-generation computers?

Vacuum tubes, huge size, and machine-language programming; ENIAC is the classic example.

How did transistors change computing?

They made second-generation computers smaller, more reliable, and faster, which suited them to business use.

What role did ICs play in the third generation?

They shrank components and enabled operating systems that supported multiprogramming and time-sharing.

Why were microprocessors pivotal in the fourth generation?

They made affordable PCs possible and helped spark the internet era.

What tech powers fifth generation?

AI running on parallel-processing hardware and ULSI chips, enabling applications such as natural language processing.